Glass.AI, Company Databases and LLMs: Three Very Different Approaches to Business Research

As AI becomes embedded in research, market analysis, and policy work, organisations are increasingly confronted with a confusing choice of tools. Traditional company databases promise structured coverage. Large Language Models (LLMs) promise speed and fluency. Glass.AI takes a third path: evidence-led, transparent AI built for deep and wide research.

Although these tools are often discussed interchangeably, they are fundamentally different in how they source information, how reliable their outputs are, and what kinds of questions they can safely answer.

Understanding these differences is critical when accuracy, traceability, and decision confidence matter.

The context: more data, more AI, more risk

The volume of information on the web is growing at an unprecedented rate, with an increasing proportion now generated or summarised by AI. At the same time, well‑documented issues with hallucinations and unverifiable outputs from LLMs persist. While many consumer use cases can tolerate this, enterprise and government research cannot.

Business research requires evidence, traceability and consistency. This is where the limitations of both company databases and general‑purpose GenAI tools become clear.

Company databases: structured, but static and shallow

Company databases are built around curated records: legal entities, financials, ownership structures, and standard classifications. Their strength lies in consistency and ease of querying. For well-established companies in mature markets, they provide a dependable baseline.

However, databases are inherently static representations of a dynamic world. Updates lag reality, coverage is uneven outside formal registries, and qualitative signals — such as emerging technologies, strategic shifts, or early adoption — are often missing or heavily delayed. They are also frequently shallow, providing only high-level data rather than deeper insights into operations, strategy, or innovation. Databases tell you what is registered, not necessarily what is happening.

They work well for compliance checks and high-level market sizing, but struggle with early-stage discovery, fast-moving sectors, or nuanced research questions where the details matter.

LLMs and GenAI tools: fluent answers, fragile foundations

LLMs have changed expectations around speed and accessibility. They can summarise topics, generate reports, and answer complex-sounding questions in seconds. For exploratory thinking or drafting, they are undeniably powerful.

But LLMs do not “know” companies in any grounded sense. They generate outputs by predicting likely text based on patterns in training data or retrieved snippets. Sources are often opaque, reasoning is hidden, and outputs can be plausible but wrong. When tasks require multiple steps (finding sources, validating claims, linking evidence), errors compound quickly.

This makes LLMs fundamentally risky for research workflows where correctness, reproducibility, and auditability are required. At scale, they tend to produce second-hand research: summaries and partial views of what might be true, rather than verified accounts of what is.

Glass.AI: evidence-led, transparent research

Glass.AI is designed for a different class of problem. Rather than starting from models or pre-defined records, it builds knowledge directly from primary public evidence. The platform continuously crawls millions of sources — company websites, government portals, news, filings, case studies, job listings, and more — and extracts structured facts with full provenance.

Every claim can be traced back to its original source. Evidence is cross-validated across multiple sources and models. Where ambiguity or risk is highest, human review is applied. The result is not a generated answer, but a constructed, auditable dataset that supports novel analysis.

This enables deep and wide research at scale: tracking emerging sectors, identifying technology adoption, monitoring market entry, or supporting policy and strategic decisions with confidence.

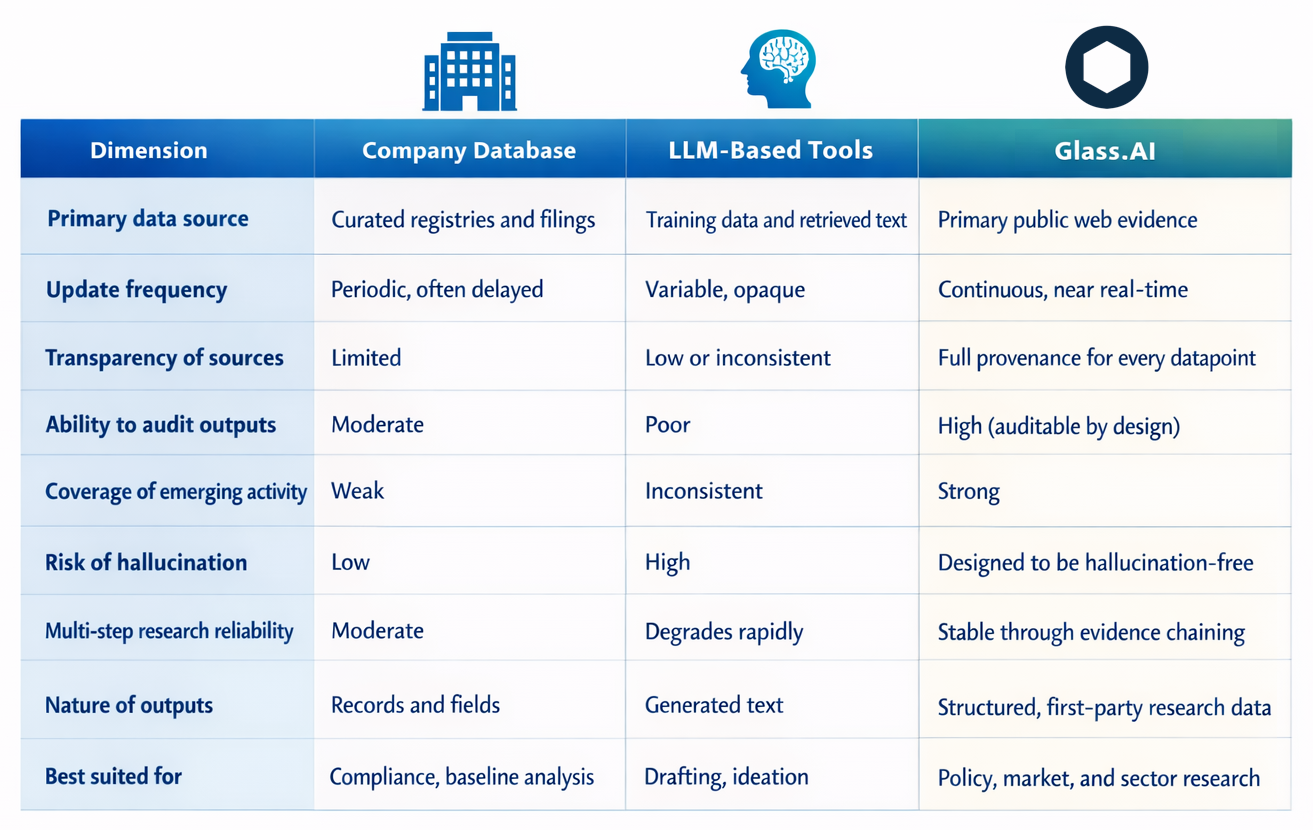

A side-by-side comparison

The differences between these approaches become clearest when viewed together:

Why this matters for enterprises and governments

As AI tools move closer to decision-making, the cost of error increases. In policy analysis, due diligence, or market strategy, it is not enough for outputs to sound convincing. Decisions must be based on evidence that can be checked, explained and defended.

At its core, Glass.AI is an evidence‑led AI research capability, not a generative assistant and not a static database.

What makes Glass.AI different:

1. Evidence drives the output

Insights are built from real, identifiable sources — company websites, news, social channels and regulator publications — not from probabilistic text generation.

2. Full data provenance

Every insight can be traced back to its original source. This enables validation, auditing and compliance, a critical requirement for regulatory and risk‑focused use cases.

3. Deep understanding of activities

Glass.AI focuses on what companies actually do: products, services, market activity and regulatory exposure, rather than high‑level classifications.

4. Continuous monitoring

Unlike point‑in‑time tools, Glass.AI can be configured for ongoing tracking, delivering alerts as new evidence appears.

5. Scale and coverage

The platform reads millions of sources across geographies, sectors and languages, including official regulatory sites.

This is why Glass.AI is used by governments and corporates to track companies, sectors and regulations at scale. It can complement GenAI tools by providing a reliable evidence layer that those tools lack.

Conclusion: different tools for different jobs

These tools are not mutually exclusive, and many organisations use all three. The key is to use each for what it does well; an effective workflow might combine them as follows:

Use company databases for static facts and compliance checks.

Use LLMs and GenAI tools for drafting and exploratory thinking.

Use Glass.AI when the task is research, discovery, tracking and analysis that must stand up to scrutiny.

As data volumes grow and AI‑generated content proliferates, the ability to distinguish evidence from generated text is becoming a strategic advantage. Glass.AI represents a structural alternative, designed not for answers that sound right, but for research that can be trusted.

If you’re interested in our discovery and tracking research capability, you can contact us at info@glass.ai.