Missing Links: Overcoming the Limitations of Large Language Models.

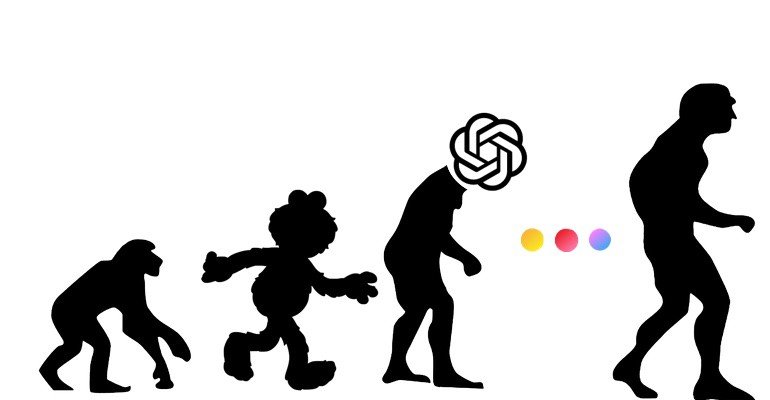

When we first discussed the large language model developments from OpenAI, we likened GPT-2 to a — very impressive — parlour trick and discussed the need for improvements in benchmarks that test language understanding. Since that time, benchmarks have evolved and more comprehensive tests of the capabilities of language models and their ability to understand have been developed, such as the collaborative BIG-bench project. Likewise, as has been seen by the response to ChatGPT, large language models have continued to be scaled and evolve to produce dramatic results.

Although the models are becoming increasingly eloquent, they suffer from the same limitations of the earlier work and likewise perform poorly on more recent benchmark sets. No surprise as they are based on scaling the same approach, predicting the most likely next word given the words that precede it. Fundamental to these limitations has been the ability of ChatGPT to make stuff up. Many examples of the impact of this have been shared online, such as Stack Overflow being overwhelmed by incorrect answers, CNET creating articles with significant inaccuracies, and the failed demo of Google’s ChatGPT alternative Bard. Even the “successful” demo that launched the ChatGPT-enabled interface to Bing contained many inaccuracies that no one seemed to notice because they looked so darn plausible. Ultimately, Large Language Models (LLMs) are ‘guessing’ the likely answer to these questions based on what has been included in their training data. This means sometimes they can seem incredibly perceptive when what is being asked is broad and very well represented in the training data, but they can start to look like a pathological liar when trying to give more detailed explanations or the response was not well represented in the training data.

Lost in the focus on the issues resulting from using LLMs as an information retrieval tool have been the impressive strides in other areas. Previous iterations seemed to lose their thread after a couple of sentences, but now they are able to produce longer and more coherent output. Among other use cases, they would currently seem well placed to be used as tools for the summarisation of specific documents or to help with the generation of content for human review. As a more general platform for language understanding, without further support, they quickly break down.

What we have seen as a response to the various issues that have been highlighted through the ChatGPT demo and the integration with Bing search has been the introduction of guardrails. These are rules to catch specific use cases that have been identified as likely to lead to incorrect or misleading results. However, the brittleness of this approach has been exposed by people gaming the system to produce even more outlandish results, most amusing of which led to Bing Chat announcing its own internal directives. Although Heath Robinson would be proud, it seems clear that Guardrails are not the solution. Rather than accounting for more and more exceptions, some fundamental capabilities — such as the continuous learning of a knowledge base, and a little linguistics to support some common sense— are required at the heart of machine reading if we are to avoid death by a thousand cuts.

Through learning small precise linguistic models and applying these models across core NLP tasks, such as text classification, word disambiguation, entity recognition, information extraction, semantic role labelling, entity linking, and sentiment analysis, at glass.ai we have been able to explore, categorise, summarise, and map huge chunks of the open web. This large-scale consumption of text has demonstrated that the precision of these models means they can generalise to and ultimately understand very large bodies of unseen content. Finding ways of bridging the gap between these capabilities and the fluency of large language models seems like an important link towards more robust language understanding solutions that can be trusted.